How to Plan a Successful Solution Test Interview

When my teams first start interviewing users, they struggle to analyze the results.

They aren’t organized in how they ask for user feedback, so analysis takes too long.

Their first reaction is to get organized by writing detailed lists of questions to ask the user.

This leads to an inquisition-style interview, where getting through the questions becomes the most important task.

But the sheer volume of answers leaves the team wondering whether the user even wanted what they were offering. They can’t see the forest (the user’s desire) for the trees (the many answers collected).

What’s needed is a repeatable way to plan a solution test interview so that it flows naturally but still highlights the answers the team is looking for.

Over time, I developed a structured introduction followed by a hypothesis-driven interview script that allows for flexibility but ensures that by the end of the interview, we have the answers we need from the user. Here’s how I plan these interviews:

Choose velocity over perfection

A well-run solution test can be scheduled for just 30 minutes.

After conducting 600+ interviews, I’ve found that long solution test interviews create too much analysis work and are not focused enough.

If you are testing regularly, then you do not need to cram everything into one user test.

Thirty minutes is enough time for one user to click through a couple of different solutions and compare and contrast them.

We often let our work fill the time slot we give it. So give it a shorter time slot and see how you do.

You can then fit 3 interviews into a 2-hour slot, with 15-minute breaks between them.
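As a quick check of the scheduling arithmetic, assuming the 15-minute breaks fall only between interviews (two breaks for three sessions):

```latex
3 \times 30\,\text{min} + 2 \times 15\,\text{min} = 90\,\text{min} + 30\,\text{min} = 120\,\text{min} = 2\,\text{h}
```

If you also schedule a break after the last session, the block grows to 135 minutes.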

This makes for a repeatable weekly user interview slot that the whole team can plan on facilitating and attending.

And it's cheaper. I can usually sign up consumers for $25 for 30 minutes and specialty business users for $45.

To be time efficient, use Zoom for remote user testing

Using Zoom can save 10-15 minutes over using Teams.

Zoom has been installed and used by most households in the United States due to two years of remote work and remote family gatherings.

As a result, Zoom users show up on time and know how to find and use the controls to share their screen, turn on video and adjust their microphone.

Teams is used mostly at work, so many of our testers have never installed or used it.

Teams users have a harder time installing it (it doesn’t work as well on older computers) and a harder time finding the controls. Teams is just not as user friendly as Zoom.

So save yourself 10 to 15 minutes per user interview by using Zoom.

Some groups of users need a pre-interview call to get their video conferencing working

Often, more people than just the interviewer attend user interviews.

If the users we are interviewing have trouble installing and running the video conferencing software, we don’t want to waste the whole group’s time watching a user get set up with the tech.

So we call the user in advance to get them set up. This can happen with older users or with users in traditional industries, who usually have much older computers at work.

This is most common with Microsoft Teams. In the long run, it’s a better use of time and money to just use Zoom. Zoom supports HIPAA compliance, requires a password or a waiting room by default, and offers encryption and other security features.

For remote interviewing, I find that having a large number of people attending the session does not make a difference to the user

Just have everyone (except the interviewer) turn off their video and mute their sound.

Again, folks are more and more comfortable on large Zoom calls due to the heavy use of video conferencing during the pandemic.

I encourage as many people as possible to attend live interviews. This is the surest way to get folks to experience user feedback rather than hoping they watch the recorded videos of the sessions.

When testing phone-based apps remotely, use a desktop browser

Most of the prototypes my teams make are Mobile First and built in a phone form factor.

But I prefer users to join the interview using their computer and test the prototype from there.

I have users join from their computer for the following reasons:

-- Capturing the user's facial expressions. I can more easily record the user's facial reactions when they are on a computer. A user’s video is usually not available during phone sessions that share a screen.

-- Less IT setup time. Users have a harder time turning on screen sharing on a phone and this can waste time during an interview.

-- Test results are comparable. Users have reliably given actionable phone feedback, even when using a fake phone embedded in a desktop browser.

Figma and other UX tools can show the user a prototype embedded in a phone form factor in a desktop browser.

What do you want to learn from users?

Product teams often lose their way when creating prototypes.

They get absorbed in making the perfect solution.

To stay focused on learnings rather than the perfect solution, create hypotheses to guide your prototype creation and your solution test interview.

Making a Primary Hypothesis and Secondary Hypotheses

Always make multiple solutions. Before the first interview, create a Primary Hypothesis.

The Primary Hypothesis is the big question you want answered.

If you’re testing the text message above, the Primary Hypothesis might be:

“The user will want to contribute to this list and get the whole list as a reward.”

There are many potential solutions to test the willingness of users to contribute to the list. This is just one way.

During the solution test, we learn how the user wants to solve their problem or opportunity.

Did the user prefer solution A or solution B? Did the user prefer the push notification messaging from the first path or another path? Did they prefer using biometrics to authenticate or getting a code sent to their phone? Were they able to upload a photo of their receipt or did they prefer typing in the details?

All of these options presented to the user are the Secondary Hypotheses. They are our guesses as to how the user will solve their problem or opportunity.

An example of Secondary Hypotheses for the above prototype would be:

“User wants to click on the link in the text message to make their contribution”

“User knows who this text is from”

In repeated user testing, the Primary Hypothesis above had mixed results as only some people wanted to contribute to the list. The Secondary Hypotheses failed completely as no one wanted to click that link in a text message and people were skeptical that the message was really sent by their friend.

Make your hypotheses before the interview

The Primary and Secondary Hypotheses shape the prototype and they shape the interview flow.

The Primary Hypothesis captures the verdict from the user about the whole solution.

Each Secondary Hypothesis usually covers a screen or interaction that the user has with the solution.

Making hypotheses helps you stay focused during the interview on what really matters.

And it’s easier to have a two-way conversation with users if you work from a list of hypotheses rather than a list of questions.

I found that when I work from a list of questions my interviews feel too much like an inquisition and not enough like a conversation. And users tend to offer information more freely in conversations.

At the end, tabulate the results for each user for each hypothesis. This helps you be more formal when analyzing a qualitative interview.
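One way to do this tabulation is a small script over your interview notes. The sketch below is an illustration under assumptions, not a fixed method from this article: the three outcome labels (validated, mixed, invalidated), the function names `tabulate` and `print_table`, and the sample notes are all hypothetical.

```python
from collections import Counter

# Hypothetical outcome scale; use whatever labels your team agrees on.
OUTCOMES = ("validated", "mixed", "invalidated")


def tabulate(results: dict[str, dict[str, str]]) -> dict[str, Counter]:
    """Count outcomes per hypothesis across all interviewed users.

    `results` maps a user ID to {hypothesis: outcome} for that user's interview.
    """
    table: dict[str, Counter] = {}
    for user, verdicts in results.items():
        for hypothesis, outcome in verdicts.items():
            if outcome not in OUTCOMES:
                raise ValueError(f"{user}: unknown outcome {outcome!r} for {hypothesis!r}")
            table.setdefault(hypothesis, Counter())[outcome] += 1
    return table


def print_table(table: dict[str, Counter]) -> None:
    """Print one row per hypothesis with a count for every outcome."""
    for hypothesis, counts in table.items():
        row = ", ".join(f"{outcome}={counts[outcome]}" for outcome in OUTCOMES)
        print(f"{hypothesis}: {row}")


if __name__ == "__main__":
    # Made-up notes from three interviews, for illustration only.
    notes = {
        "user-1": {"Primary: wants to contribute": "validated",
                   "Secondary: clicks text link": "invalidated"},
        "user-2": {"Primary: wants to contribute": "invalidated",
                   "Secondary: clicks text link": "invalidated"},
        "user-3": {"Primary: wants to contribute": "mixed",
                   "Secondary: clicks text link": "invalidated"},
    }
    print_table(tabulate(notes))
```

Keeping one row per hypothesis, rather than one row per question, mirrors the hypothesis-driven script: the table answers "did this guess hold up?" directly.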

Interviewing users during solution tests needs a specific outcome that we can count on

How can we feel confident that we have a solution that works for the user?

The following techniques can add confidence to qualitative analysis.

Create a Compare and Contrast Moment

The main reason I have teams create multiple solutions is to create a compare and contrast moment.

It’s much easier for users to give high quality feedback when they’ve seen at least two versions of a way to solve a problem.

In fact, they are more likely to be able to come up with an interesting solution suggestion of their own after seeing more than one solution.

Teresa Torres has long advocated for multiple solutions in her book Continuous Discovery Habits, as has Jake Knapp in his Sprint book (in the “Rumble” chapter).

However, the compare and contrast isn’t enough. Sometimes users will like one solution over another but they wouldn’t actually use EITHER solution.

Use the following questions to really test whether they would use one of the solutions if it were built.

Ask for a form of commitment

Build up to a moment in the interview when you ask if the user would commit to using your offering.

Alberto Savoia, former Director of Engineering at Google, discusses the concept of getting a demand signal in his brief and useful videos. He also wrote The Right It, one of the few in-depth guides to Product Discovery. He refers to this as the user putting “skin in the game.”

The idea is to ask the user to make a commitment, not just give an opinion.

Avoid Yes/No questions since they are too easy to answer.

Instead, ask the user for something that is more difficult to part with than an opinion:

Enter a credit card into a realistic form to buy your offering

Download your app and create an account right now

Give you their email address

Commit to replace their current solution with yours

Declare a price point at which they would switch to your solution

Make a monetary deposit (for example, Tesla collected a $2,000 deposit to get on a waitlist for a car that wouldn’t be delivered for over a year)

You don’t need to actually collect the credit card number or payment but seeing a user’s intention (or lack thereof) builds more confidence in a user’s true desire than just collecting their opinion.

That said, we always collect a final opinion from a user about our solutions since there is often not a simple way to gauge demand as shown above.

How you ask for the user’s opinion makes a big difference.

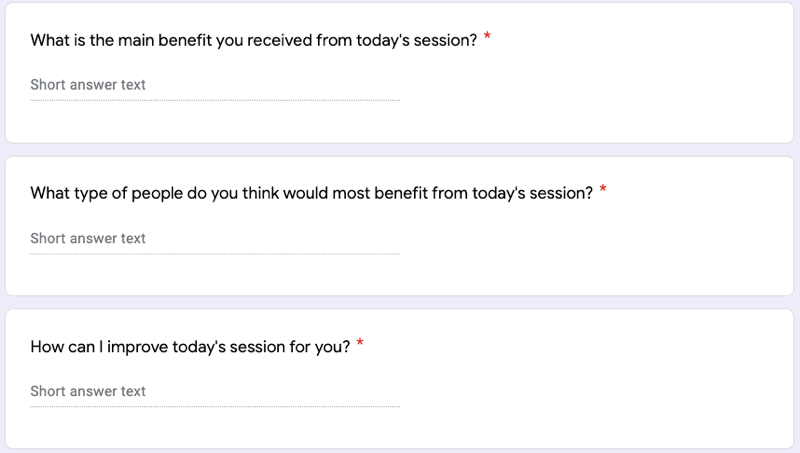

These are two qualitative survey questions that have been shown to predict success by capturing a user’s opinion.

Sean Ellis is an independent consultant who has popularized aggressively testing and evolving an offering to quickly determine if you’ve achieved Product-Market Fit.

He co-wrote the book Hacking Growth and invented the “Disappointment” question, which is now widely used in Product Management to gauge user attachment to a solution. The phrasing follows a formula and is adjusted slightly to fit the specific solution being tested. In the example below, I was asking UC Berkeley grad students about the session I had just taught them.

The “Disappointment” question can be used to validate a product or service, and even smaller concepts like a new feature.

Main question in the “Disappointment” survey

Follow on questions to understand the answer to the first question
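The Disappointment question is commonly phrased as “How would you feel if you could no longer use [product]?” with answers ranging from “Very disappointed” to “Not disappointed.” Sean Ellis’s widely cited benchmark is that if at least 40% of respondents answer “Very disappointed,” the offering likely has Product-Market Fit. Below is a minimal sketch of that scoring; the answer labels and the function names `very_disappointed_share` and `passes_benchmark` are assumptions, and every answer counts in the denominator. With the handful of users in a solution test, treat the result as a signal rather than proof.

```python
# Answer options assumed for this sketch (compared in lowercase).
ANSWER_OPTIONS = ("very disappointed", "somewhat disappointed", "not disappointed")

# Widely cited Sean Ellis benchmark: 40%+ "very disappointed" suggests product-market fit.
PMF_THRESHOLD = 0.40


def very_disappointed_share(answers: list[str]) -> float:
    """Fraction of answers that are "very disappointed"; all answers count in the denominator."""
    if not answers:
        raise ValueError("no answers to score")
    normalized = [a.strip().lower() for a in answers]
    unknown = [a for a in normalized if a not in ANSWER_OPTIONS]
    if unknown:
        raise ValueError(f"unexpected answers: {unknown}")
    return normalized.count("very disappointed") / len(normalized)


def passes_benchmark(answers: list[str], threshold: float = PMF_THRESHOLD) -> bool:
    """True if the "very disappointed" share meets or exceeds the threshold."""
    return very_disappointed_share(answers) >= threshold
```

The follow-on questions matter as much as the score: they explain why the “very disappointed” users feel that way.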


Fred Reichheld of Bain & Company invented the Net Promoter Score (NPS) and used it extensively to understand the loyalty of users to a solution or concept. It capitalizes on the authenticity that people show when they recommend something to someone else: they are putting their reputation on the line, and the NPS question (“How likely is it that you would recommend [product] to a friend or colleague?”) captures a numerical measure of that willingness to recommend.
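For reference, NPS answers are given on a 0 to 10 scale. Respondents scoring 9 or 10 are promoters, 0 to 6 are detractors, and 7 or 8 are passives; the score is the percentage of promoters minus the percentage of detractors. A minimal Python sketch of that formula (the function name is hypothetical):

```python
def net_promoter_score(scores: list[int]) -> float:
    """Return NPS: % promoters (9-10) minus % detractors (0-6), from -100 to 100."""
    if not scores:
        raise ValueError("no scores")
    for s in scores:
        if not 0 <= s <= 10:
            raise ValueError(f"score out of range 0-10: {s}")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    # Passives (7-8) count toward the total but neither add nor subtract.
    return 100 * (promoters - detractors) / len(scores)


if __name__ == "__main__":
    # Hypothetical answers from five users: 3 promoters, 1 passive, 1 detractor.
    print(net_promoter_score([10, 9, 9, 7, 4]))  # → 40.0
```

With only a few interviews, each response moves the score a lot, so read it as directional.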

NPS does not fit all situations

NPS is good for validating a product or service

NPS doesn’t work as well for validating smaller things like a new feature

Since it’s based on virality, the product or service needs to lend itself to being recommended. Many products are widely used but not voluntarily recommended.

NPS doesn’t work when users aren’t allowed to talk to each other about their methods and processes such as in classified government work or highly secretive corporate work

NPS doesn’t work when users don’t feel comfortable sharing that they use certain medical services or products

Many users have online behaviors that they don’t want others to know about, so recommendation just doesn’t happen


Are you nervous about interviewing users?

Most people don’t start out as user interviewers. But most people can learn to be effective interviewers.

The easiest way to start is to use a templated script and speak slowly.

Most solution tests have a predictable structure, and a script can guide new interviewers.

Using a hypothesis-driven approach as described above will allow you to guide the conversation once you’ve gotten it started.

Before interviewing target customers, interview "friendlies"

“Friendlies” are usually coworkers and colleagues that are NOT involved in your project.

For entrepreneurs and students, this might be family or friends.

The opinions of “friendlies” about your solutions won’t necessarily be actionable since they are not likely your target customer.

But testing with “friendlies” will find logic flaws in your prototype and hypotheses while reducing your stress since you can do a “dry run” before the real interviews.

This saves you from making simple mistakes with real users, who are harder to find and cost money and time.

Adopt a growth mindset

Product teams are usually too optimistic.

When they create solutions for a customer pain point, they generally believe that the solution will work.

But what if it doesn’t? Or what if it only partially works?

By adopting a growth mindset, we keep an open mind and strive to be truly interested in how the customer responds.

Scientists use the Null Hypothesis [1] framework to keep an open mind.

Null Hypothesis framework: Two hypotheses are proposed: the null hypothesis of no effect and the alternative hypothesis of an effect

At the start of research, scientists assume “no effect”…in other words, that their solution won’t work.

They collect evidence and only after careful analysis would they be convinced that a solution will work.

The alternative hypothesis of an “effect” represents the positive validation of our idea by our target customers.
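To make the null-hypothesis idea concrete for a compare and contrast moment, assume as the null that users have no preference between solution A and solution B, so each user picks A with probability 0.5. The sketch below is a standard one-sided exact binomial test (the function name `prob_at_least` is hypothetical): it computes how likely it would be to see at least k of n users pick A by chance alone. Solution test samples are small, so treat it as a sanity check on your enthusiasm, not a formal verdict.

```python
from math import comb


def prob_at_least(k: int, n: int, p: float = 0.5) -> float:
    """One-sided exact binomial test: P(X >= k) for X ~ Binomial(n, p).

    Null hypothesis (assumed): each user picks solution A with probability p;
    p = 0.5 means "no preference between A and B".
    """
    if not 0 <= k <= n:
        raise ValueError("need 0 <= k <= n")
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


if __name__ == "__main__":
    # Hypothetical result: 7 of 8 users preferred solution A.
    print(round(prob_at_least(7, 8), 4))  # → 0.0352
```

Here, 7 of 8 users preferring A would happen by chance with probability 9/256, about 3.5%, so the “no effect” assumption starts to look unlikely. A 5 of 8 split, by contrast, is easily explained by chance.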

You can keep an open mind about whether the “effect”/validation is achieved by:

Structuring your prototype and questions to be open ended

Avoiding Yes/No questions

Always presenting multiple solutions

Creating compare and contrast moments

Asking the “Disappointment” and NPS questions

Asking for a form of commitment

Don't allow stereotypes to undermine your discovery experiments

I have heard my teams introduce these kinds of opinions into the ideation process:

"Millennials prefer not to pick up the phone"

"Older users won't use texting to renew their service"

“Male patients want to be treated by male doctors”

These are not necessarily true, and they might even be totally wrong. We all have biases, so the key is to step back and design our user interviews so that users show us THEIR preferences rather than reflecting OUR preferences.

One way to avoid bias is to focus the interview on the behavior change desired and not personal characteristics:

For the millennial communication question…instead frame the research with “What are the different forms of communication (phone, text, etc.) and which one do users prefer to do the task?”

For the doctor selection question…instead frame the research more generically with “What are the different criteria used to choose doctors and which ones will users select first to find the right doctor for them?”

Summary

When I started interviewing users, I really did struggle to effectively analyze the results.

However, once I started getting a bit more organized and focused, I was able to conduct better interviews and streamline my analysis of what happened during the interview.

Being organized with Primary and Secondary Hypotheses up front meant that I could understand the users’ intentions in real time, without extensive post-interview re-watching of videos and re-reading of transcripts.

I’ve been able to reduce the time of one interview to 30 minutes and encourage my teams to interview users more often so that they don’t have to cram everything into one interview.

All of these tips will help teams achieve regular user testing and incorporate the customer’s feedback into the product on a continuous basis.

[1] The Null Hypothesis concept was developed in a couple of forms in the 1930s by Ronald Fisher, Jerzy Neyman, and Egon Pearson.

Jim is a coach for Product Management leaders and teams in early stage startups, tech companies and Fortune 100 corporations.

Previously, Jim was an engineer, product manager and leader at startups where he developed raw ideas into successful products several times. He co-founded PowerReviews which grew to 1,200+ clients and sold for $168 million. He product-managed and architected one of the first ecommerce systems at Fogdog.com which had a $450 million IPO.

Jim is based in San Francisco and helps clients engage their customers to test and validate ideas in ecommerce, machine learning, reporting/analysis, API development, computer vision, online payments, digital health, marketplaces, and more.

He graduated from Stanford University with a BS in Computer Science.