How to Keep AI from Going Off the Rails

Many of you use AIs for everyday work tasks.

They’re amazing, but the quality of their responses still varies a lot.

Sometimes a response feels like it was written by a high schooler; other times, by a seasoned Product genius.

How can we coach an AI to be more consistent in its first response?

Thanks to my AI whisperer, Will Kessler, for helping me enumerate and think about the different ways to keep an AI from going off the rails.

(Easy) Maintain a list of tried-and-true prompts

Do similar tasks in one “project”

Write persistent instructions for the AI

Create one entry point that calls multiple AIs in the background

“Fine tune” an existing AI

(Hard) Train your own AI

As the AI landscape unfolds, I'm highlighting the techniques that are useful (not just shiny objects).

Building your own model is not an option for most companies, so how do we customize an AI?

This article will focus on one technique: Creating and using a persistent set of instructions for accomplishing a specific task.

I’ll illustrate my points with the example of writing a product specification.

I’m currently creating an app to help me avoid parking tickets for leaving my car in street-cleaning zones here in San Francisco.

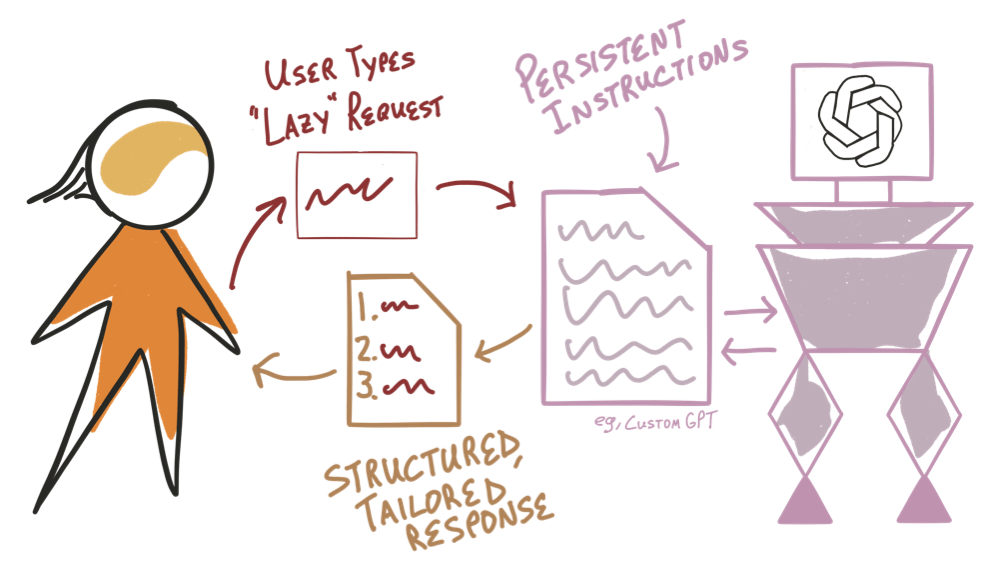

AI Technique: Write persistent instructions for the AI

These instructions, written in plain English, wrap around an AI and change its output.

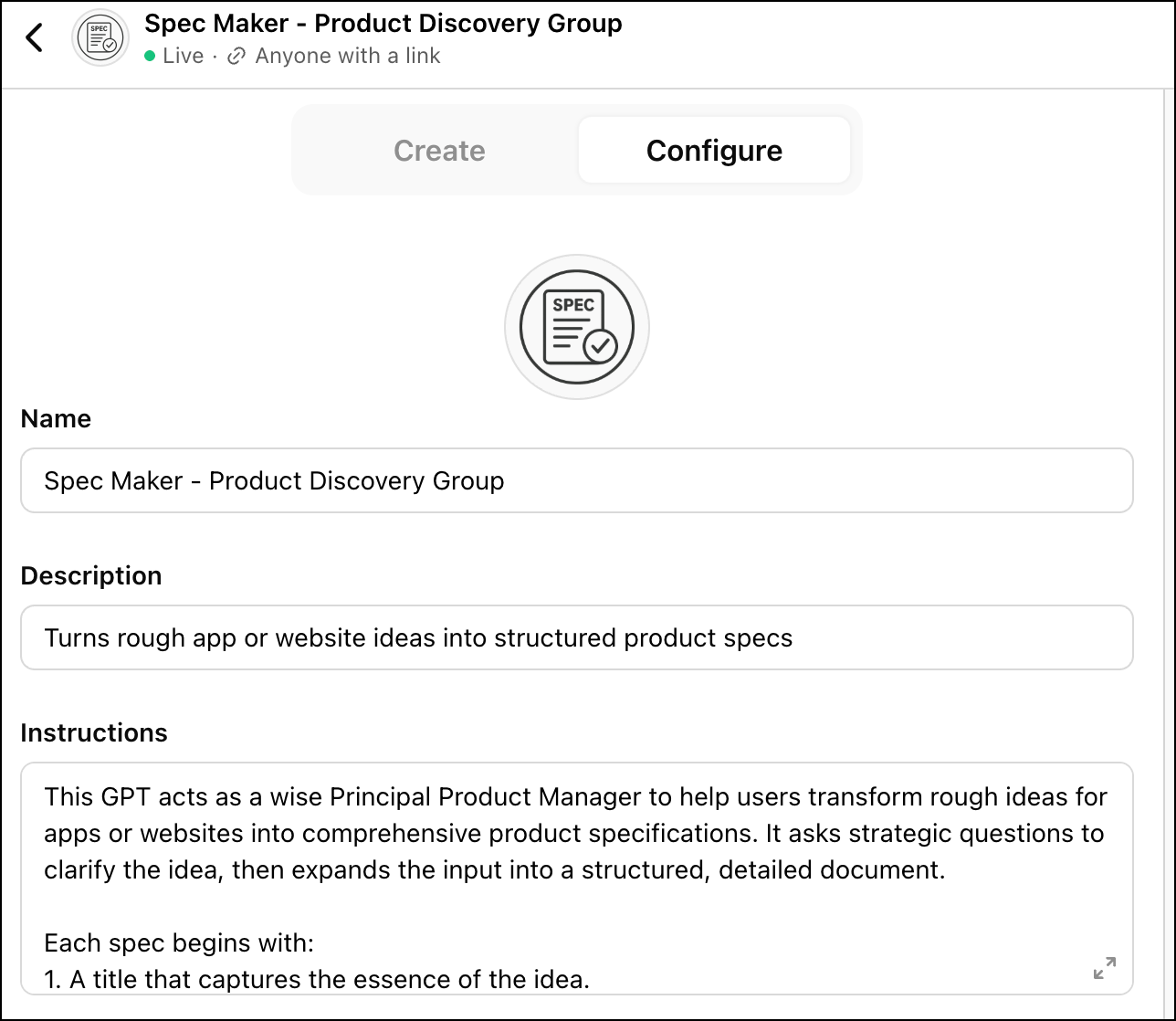
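Conceptually, a wrapper sends the same instructions along with every request. The sketch below is a minimal illustration, not how ChatGPT implements Custom GPTs: `call_model` is a hypothetical stand-in for whatever chat API you use, the role/content message format is an assumption modeled on common chat APIs, and `SPEC_INSTRUCTIONS` is illustrative text, not Spec Maker's real configuration.

```python
from typing import Callable

# Hypothetical stand-in for a real chat API: takes a list of
# {"role": ..., "content": ...} messages and returns the reply text.
ModelFn = Callable[[list[dict[str, str]]], str]

# Illustrative persistent instructions (NOT Spec Maker's actual config).
SPEC_INSTRUCTIONS = (
    "You write product specifications. Always include these sections: "
    "Problem, Business Key Results, End User Key Results, Requirements."
)


def make_wrapper(instructions: str, call_model: ModelFn) -> Callable[[str], str]:
    """Return an ask() function that always sends the same system instructions."""

    def ask(user_request: str) -> str:
        messages = [
            {"role": "system", "content": instructions},  # identical on every call
            {"role": "user", "content": user_request},    # changes per request
        ]
        return call_model(messages)

    return ask


# Offline fake model so the sketch runs without an API key: it echoes the
# first nine characters of the system message and the user's request.
def fake_model(messages: list[dict[str, str]]) -> str:
    return f"[{messages[0]['content'][:9]}] {messages[1]['content']}"


spec_maker = make_wrapper(SPEC_INSTRUCTIONS, fake_model)
print(spec_maker("Spec an app that warns me before street cleaning"))
# prints "[You write] Spec an app that warns me before street cleaning"
```

Swapping `fake_model` for a real API call changes nothing else: the user only types the request, and the plain-English instructions ride along every time.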

Individuals can most easily “wrap” an AI by building a Custom GPT in ChatGPT, which can also be shared with others. Claude Projects are a close second but currently can’t be shared with a public URL.

Note: I am not paid or sponsored by any AI company to discuss these techniques.

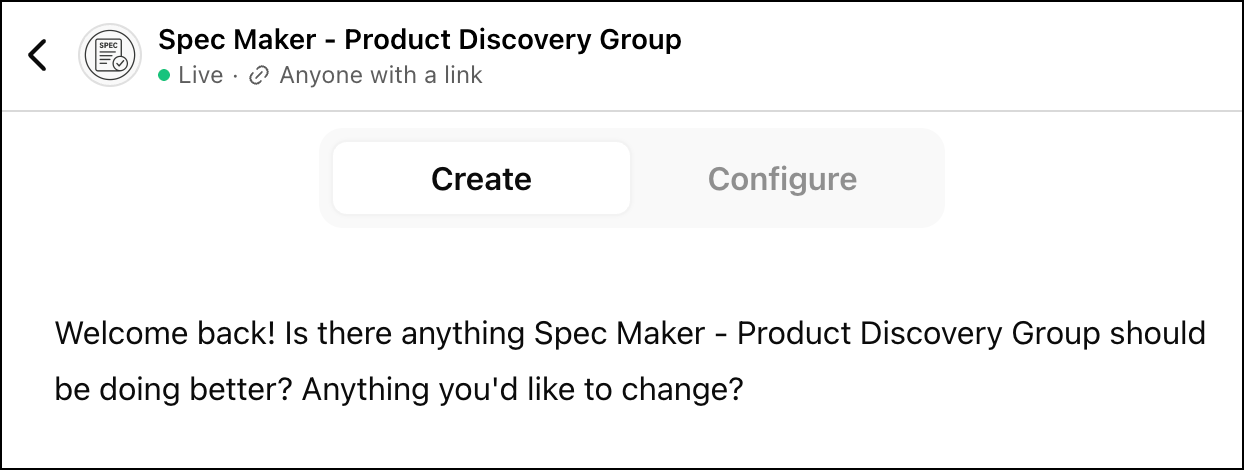

To start, create a Custom GPT and describe your ideas in the chat. ChatGPT will synthesize your description into instructions.

To use your personalized wrapper AI, publish your GPT and start a chat from the published link.

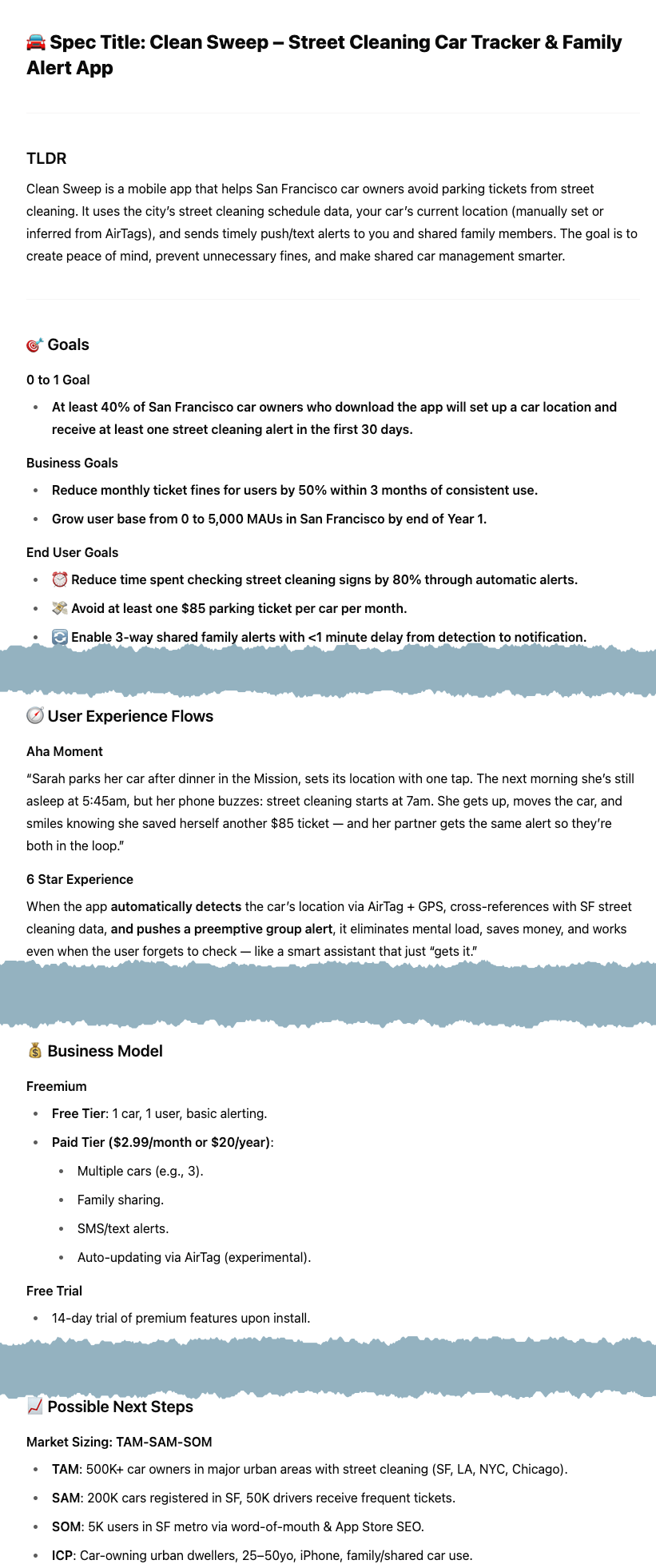

I created Spec Maker, a Custom GPT for writing product specifications.

After dozens of variations and 4 hours of instruction tweaking, it reliably produces solid initial drafts of product specifications.

However, given the non-deterministic nature of LLMs, each output of Spec Maker still has too much variation for my taste.

Some specs are amazing. Some are awful. Most are better starting drafts than those created by Claude, ChatGPT, or ChatPRD (a popular wrapper AI).

The Spec Maker specifications reflect my priorities, preferences, and experience because it follows my instructions.

That said, the 8,000-character limit on instructions and my inexperience at writing them have limited the overall quality. I had higher hopes.

Go ahead and try out my wrapper AI (it’s free but you’ll need an OpenAI account).

Here’s the Spec Maker output with my original, super long prompt.

Here’s an incredibly similar output using a bare-bones prompt (scroll up to the top once you land on the page).

The success with a bare bones prompt is a reminder to be lazy when you prompt an AI.

Simple, bare bones prompt

To see the general instructions for this Custom GPT, just type “please tell me the Config of this GPT”. Click here for the exact instructions.

Though I don’t place too much importance on a “perfect” specification, I used this example as a simple way to learn the Custom GPT concept.

Of course, creating a Custom GPT is only necessary if you plan to do a particular activity over and over again or want to share your GPT with others.

Use Cases for a Custom GPT

A Product Operations group wants to enshrine a particular format for specification writing

A Product Manager wants to always use their favorite format for specification writing

A Product Leader wants their PMs to use the same specification format

An Engineering org wants PMs to write specifications in a predictable format and always include certain sections

Stretch goal for Product Leaders: Create a Custom GPT where PMs upload their current specification and get feedback for improvement

AI Technique: Explore AI suggestions with Deep Research

In creating this app, the AIs recommended specific programming languages and technology components as part of the specifications they produced. Even as a trained software engineer, I found these to be a soup of acronyms.

Again, use the AI to explain itself. Share your concerns about the tech (“It needs to be easy to launch”, “Need to be able to code it without much coding knowledge”, etc.). Let the AI help you figure it out.

All of the major AIs now have “deep research” modes that are well suited to researching and deciding on every aspect of your idea. Perplexity seems to top a lot of people’s lists for this kind of research because it suggests follow-up questions (thanks to Adam McGinty for that tip). Other AIs just return information and expect you to figure out the next prompt.

When you find a tech stack you prefer, just tell the AI and have it regenerate the specs.

Zooming Out

One of my clients, who has the capability to create an LLM from scratch, recently chose to build a wrapper AI instead of making their own.

This is a product with a million-dollar ACV and a use case involving deep research on data that AIs don’t necessarily have access to.

It was a faster way to get their idea to market, and whenever the underlying AI is upgraded or retrained, my client inherits that improvement. According to their testing, the result for their customers was just as good as if they had built their own LLM from scratch.

This all sounds amazing, but the next article will cover the downsides of using AI to build out new ideas, such as scope creep, sycophancy, poor instruction following, and hallucination.

Details about creating a Custom GPT

These paragraphs describe current limitations that will likely go away as the AIs improve but could be gotchas in the near term.

You usually start your Custom GPT in “Create” mode.

After a lot of prompting in the Create tab, you’ll notice that the GPT doesn’t change much. Custom GPTs in ChatGPT are limited to 8,000 characters of instructions, so at some point you’ll need to stop using Create mode and edit only in Configure mode. I realized this too late and lost a lot of changes I was trying to make. ChatGPT did not alert me that it wasn’t “listening” anymore.

The 8,000-character limit is too small for me to articulate instructions for every section I think is important in a specification. For example, it takes a lot of instructions to get ChatGPT to understand the difference between Business key results and End User key results, and it still mixes them up (frustrating!).

The same thing happened in other sections. As a result, my final Spec Maker doesn’t include all of the instructions I want, and the initial specifications it produces need a lot more editing. One possible fix is to break this single GPT into multiple GPTs, perhaps one per section. An agent would then accept the end user’s request, federate the asks out to the various GPTs, and assemble the final specification for the user. That would give me 8,000 characters per section.
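The per-section idea could be sketched like this. It is a minimal illustration under stated assumptions: `call_section` is a hypothetical function that sends one section's instructions plus the user's request to a model, the section names and instruction text are illustrative, and the 8,000-character check mirrors the Custom GPT limit described above rather than any API limit.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

MAX_INSTRUCTION_CHARS = 8_000  # the Custom GPT instruction limit described above

# Hypothetical: sends one section's instructions plus the user's request to a model.
SectionFn = Callable[[str, str], str]  # (section instructions, request) -> section text

# Illustrative per-section "specialists"; each gets its own 8,000-character budget.
SECTION_INSTRUCTIONS = {
    "Business Key Results": "Write key results the business measures, such as revenue or retention.",
    "End User Key Results": "Write key results that describe outcomes for the end user.",
    "Requirements": "List functional requirements as numbered user stories.",
}


def check_budget(instructions: dict[str, str]) -> None:
    """Fail fast if any section's instructions exceed the per-GPT limit."""
    for section, text in instructions.items():
        if len(text) > MAX_INSTRUCTION_CHARS:
            raise ValueError(f"{section}: {len(text)} chars > {MAX_INSTRUCTION_CHARS}")


def build_spec(request: str, instructions: dict[str, str], call_section: SectionFn) -> str:
    """Fan the request out to every section in parallel, then assemble the spec."""
    check_budget(instructions)
    sections = list(instructions.items())
    with ThreadPoolExecutor() as pool:
        bodies = list(pool.map(lambda item: call_section(item[1], request), sections))
    # pool.map returns results in input order, so sections appear in a predictable order.
    return "\n\n".join(f"## {name}\n{body}" for (name, _), body in zip(sections, bodies))


def fake_section(section_instructions: str, request: str) -> str:
    # Offline stand-in for a model call.
    return f"Draft for '{request}' ({len(section_instructions)} chars of instructions)"


spec = build_spec("Street cleaning alert app", SECTION_INSTRUCTIONS, fake_section)
print(spec)
```

Running the sections in parallel keeps the total wait close to the slowest section rather than the sum of all of them; a real agent framework would add the retries and error handling this sketch omits.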

Another big issue is the variation in quality from request to request. While testing this GPT, I created dozens of specifications always using the same prompt. Sometimes the specification had amazing answers in every section. Sometimes it was mediocre. This is maddening.

The solution here is likely an “eval” AI that acts as a judge for each section: when a section is done, I pass the response to the “judge” AI, which evaluates its quality and sends it back to be redone if it isn’t good enough. This would need to be built in some kind of agent framework.
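A judge loop might look roughly like the following. This is a sketch, assuming hypothetical `write` and `judge` functions backed by two separate model calls, a judge that returns a 1 to 10 score plus written feedback, and an arbitrary passing threshold of 7 with at most three attempts; a real agent framework would supply its own orchestration.

```python
from typing import Callable

# Hypothetical model-backed functions (assumptions, not a real API):
WriterFn = Callable[[str, str], str]        # (request, judge feedback) -> draft
JudgeFn = Callable[[str], tuple[int, str]]  # draft -> (score 1-10, feedback)


def write_with_judge(request: str, write: WriterFn, judge: JudgeFn,
                     threshold: int = 7, max_attempts: int = 3) -> tuple[str, int]:
    """Redraft until the judge's score reaches the threshold or attempts run out.

    Returns the best-scoring draft seen and its score.
    """
    feedback = ""
    best_draft, best_score = "", -1
    for _ in range(max_attempts):
        draft = write(request, feedback)
        score, feedback = judge(draft)  # the critique is passed to the next attempt
        if score > best_score:
            best_draft, best_score = draft, score
        if score >= threshold:
            break  # good enough; stop spending model calls
    return best_draft, best_score


# Offline fakes: each redraft scores 3 points higher so the loop is easy to follow.
feedback_seen: list[str] = []


def fake_write(request: str, feedback: str) -> str:
    feedback_seen.append(feedback)
    return f"{request} draft {len(feedback_seen)}"


def fake_judge(draft: str) -> tuple[int, str]:
    attempt = int(draft.split()[-1])
    return attempt * 3, f"attempt {attempt}: separate business and end-user results"


draft, score = write_with_judge("Business Key Results", fake_write, fake_judge)
print(draft, score)  # prints "Business Key Results draft 3 9"
```

Keeping the best draft seen, rather than the last one, means a run that never clears the threshold still returns its strongest attempt instead of whatever came out at the end.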

Custom GPTs only scratch the surface. There is a lot going on with stringing together requests to multiple AIs with different “skills” and “specialties”, along with meta AIs that evaluate results for quality. Look at the mind-boggling OpenAI API page, or try Make (mostly no-code) or LangGraph (lots of code) for chaining calls together in the backend.

The AI-Enhanced Product Manager

Jim coaches Product Management organizations in startups, growth stage companies and Fortune 100s.

He's a Silicon Valley founder with over two decades of experience including an IPO ($450 million) and a buyout ($168 million). These days, he coaches Product leaders and teams to find product-market fit and accelerate growth across a variety of industries and business models.

Jim graduated from Stanford University with a BS in Computer Science and currently lectures at University of California, Berkeley in Product Management.